Motivation

Imagine a coworker who never remembers what you told them, forcing you to keep repeating yourself. That would be frustrating, and the fragmented communication would limit your ability to build a meaningful working relationship.

Human interactions are valuable precisely because we naturally accumulate and access memories over time. We remember shared experiences without conscious effort. These memories create context, continuity, and depth. A friend mentioning "remember that time at the beach?" instantly activates a rich network of associated memories without requiring a complete retelling.

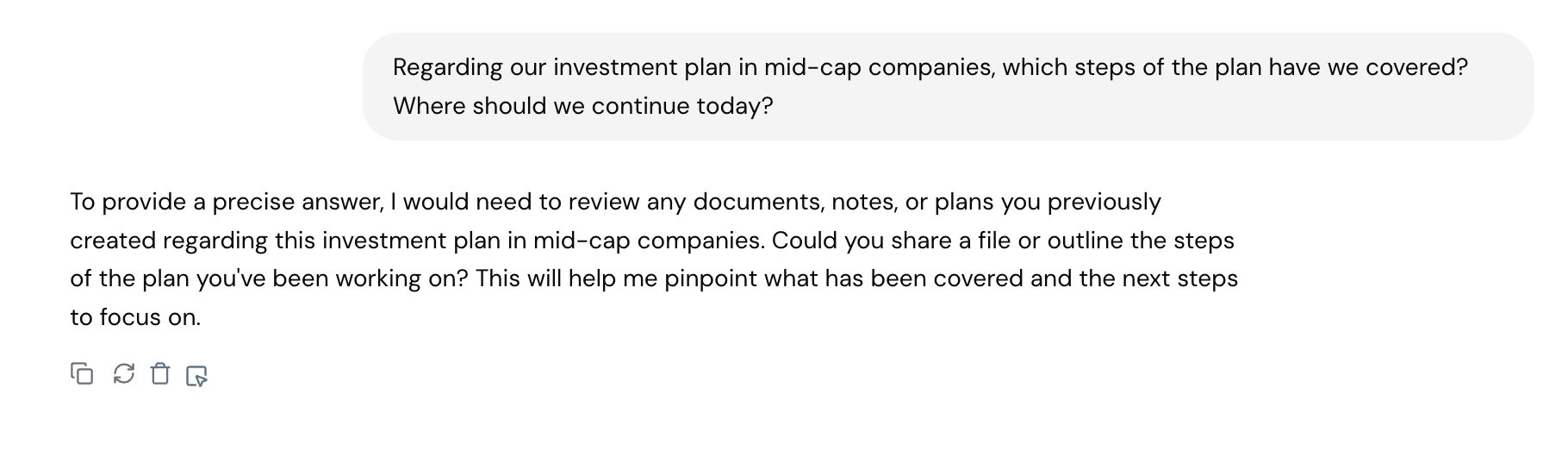

Current large language models (LLMs) often operate without persistent memory, treating every interaction as an isolated event. If you use a popular LLM to draft emails, you must specify your preferred style, tone, and signature each time, because the model does not retain them between sessions. This lack of continuity creates friction: the process becomes inefficient and frustrating, and users bear the burden of re-establishing context. Such limitations highlight the gap between how humans naturally recall past interactions and how LLMs struggle to emulate this capability.

Agents therefore need memories to retain and recall past interactions and to deliver seamless, personalized user experiences.

Importance of Memories in Agents

Memories enable Athena to form genuine relationships with users by preserving crucial context across multiple interactions. Rather than treating each conversation as an isolated event, memory-equipped agents recall preferences, past experiences, and personal details without requiring repetitive explanations. This continuous knowledge accumulation allows agents to provide an increasingly personalized experience while building trust through user interaction.

Memory systems transform Athena into a persistent companion who grows and adapts over time. With memory, Athena recognizes patterns in user behavior and anticipates needs based on historical interactions. This evolution toward human-like conversation creates a more natural, efficient experience where the value of Athena compounds with each interaction.

Challenges Encountered

Originally, the agent did not retain information shared in previous sessions, so each conversation essentially started from scratch. To have a useful conversation with Athena, users had to repeatedly teach her the same knowledge points, and Athena could not learn from her previous mistakes. The lack of memory also severely limited personalization: Athena’s answers couldn’t take past interactions into account, so she gave generic answers instead of tailored responses.

This conversational amnesia created significant friction in user interactions. Without persistent memory, Athena couldn't build meaningful relationships with users over time, as she lacked awareness of user preferences, past conversations, or personal details that had been previously shared. Each interaction existed in isolation, preventing the natural flow and evolution of conversations that humans expect.

For long-term projects or complex tasks, the absence of memory created continuity problems. Athena couldn't recall previous stages of a project, past decisions, or contextual information that would be essential for providing consistent support. When faced with similar problems she had encountered before, she couldn't reference previous solutions or approaches, leading to inefficient problem-solving.

Additionally, without the ability to detect patterns in user behavior or recurring themes across conversations, Athena missed opportunities to provide proactive assistance or identify underlying issues. These limitations created a significant gap between the human-like conversation users expected and the stateless exchanges Athena was capable of delivering before implementing a memory system. As a result, we equipped Athena with memories using the Zep memory layer.

Background

What are Memories?

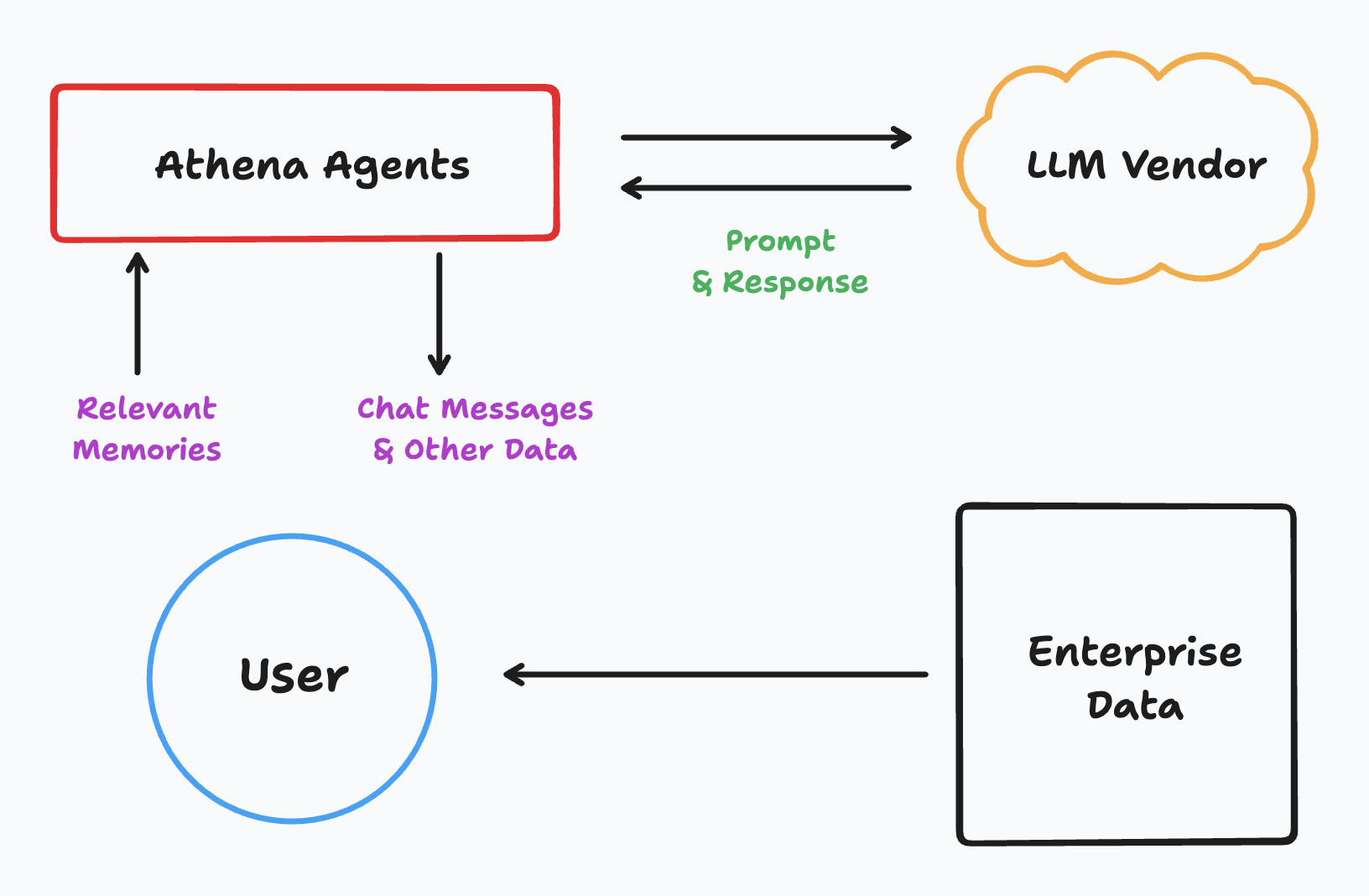

We use the Zep memory layer to equip Athena agents with memories. Zep allows AI agents to store and retrieve past data, mimicking human-like memory structures. These memories are structured into two types:

- Episodic Memory: Represents specific past events or interactions, storing raw inputs like messages, text, or JSON. For instance, an agent recalls a specific interaction where the user said, "I prefer formal language in my emails and use 'Best regards' as a closing." This memory includes the exact text of the message and the time it was shared.

- Semantic Memory: Focuses on general knowledge extracted from episodic memories, capturing the relationships and meanings of concepts. For instance, after multiple past interactions, the agent understands that the user generally prefers a formal tone in emails and often closes with "Best regards." This is a generalized piece of knowledge derived from multiple episodic memories.

This approach allows Athena to maintain both the details of past interactions and a generalized understanding of the data. These stored memories enhance AI performance by enabling Athena to retrieve relevant historical or contextual information dynamically.
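The relationship between the two memory types can be illustrated with a minimal sketch. It is not Zep's implementation: the `Episode` class, the `observations` field, and the `summarize` function are hypothetical names, and the observations are supplied by hand here, whereas a real memory layer would extract them from the raw text.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Episode:
    """Episodic memory: the raw message and the time it was shared."""
    text: str
    timestamp: datetime
    observations: dict  # hypothetical: attribute -> value noted in this episode


def summarize(episodes: list) -> dict:
    """Semantic memory: the most frequently observed value per attribute."""
    counts = {}
    for episode in episodes:
        for attribute, value in episode.observations.items():
            counts.setdefault(attribute, Counter())[value] += 1
    return {attribute: c.most_common(1)[0][0] for attribute, c in counts.items()}


episodes = [
    Episode("I prefer formal language in my emails and use 'Best regards' as a closing.",
            datetime(2025, 1, 5), {"tone": "formal", "closing": "Best regards"}),
    Episode("Keep this one formal, please.",
            datetime(2025, 1, 12), {"tone": "formal"}),
    Episode("This note to a friend can be casual.",
            datetime(2025, 1, 20), {"tone": "casual"}),
]

print(summarize(episodes))  # {'tone': 'formal', 'closing': 'Best regards'}
```

Each episode keeps its exact text and timestamp, while the summary holds only the generalized preference.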

Temporal Knowledge Graphs

Temporal knowledge graphs model relationships and facts dynamically as they evolve over time.

- Nodes: In a temporal knowledge graph, nodes represent entities. For example:

  - Episodic Nodes contain raw input data from interactions.

  - Semantic Nodes represent extracted entities and their summaries.

  - Community Nodes represent clusters or groups of related entities.

- Edges: Edges connect nodes in the graph, representing relationships between them. Each edge also holds temporal attributes, such as when the relationship became valid and when it ceased to be valid.

This structure allows Athena to model complex, evolving relationships dynamically.
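As a rough illustration of nodes and time-bounded edges, here is a minimal sketch in plain Python. All class, field, and method names (`Node`, `Edge`, `TemporalGraph`, `valid_from`, `valid_to`, `invalidate`, `facts_at`) are assumptions made for this example and do not reflect Zep's actual schema or API.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class Node:
    """An entity; kind is 'episodic', 'semantic', or 'community'."""
    name: str
    kind: str


@dataclass
class Edge:
    """A relationship (fact) between two nodes with a validity window."""
    source: str
    target: str
    fact: str
    valid_from: datetime
    valid_to: Optional[datetime] = None  # None means the fact still holds

    def is_valid_at(self, moment: datetime) -> bool:
        if moment < self.valid_from:
            return False
        return self.valid_to is None or moment < self.valid_to


@dataclass
class TemporalGraph:
    nodes: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        self.nodes[node.name] = node

    def add_edge(self, edge: Edge) -> None:
        self.edges.append(edge)

    def invalidate(self, fact: str, when: datetime) -> None:
        """Close the validity window of every open edge carrying this fact."""
        for edge in self.edges:
            if edge.fact == fact and edge.valid_to is None:
                edge.valid_to = when

    def facts_at(self, moment: datetime) -> list:
        """Return the facts that were valid at the given moment."""
        return [e.fact for e in self.edges if e.is_valid_at(moment)]


graph = TemporalGraph()
for name in ("user", "formal tone", "casual tone"):
    graph.add_node(Node(name, "semantic"))

graph.add_edge(Edge("user", "formal tone", "user prefers formal tone", datetime(2025, 1, 1)))
# The preference changes: close the old fact and record the new one.
graph.invalidate("user prefers formal tone", datetime(2025, 3, 1))
graph.add_edge(Edge("user", "casual tone", "user prefers casual tone", datetime(2025, 3, 1)))

print(graph.facts_at(datetime(2025, 2, 1)))  # ['user prefers formal tone']
print(graph.facts_at(datetime(2025, 4, 1)))  # ['user prefers casual tone']
```

Closing an edge's validity window instead of deleting the edge keeps the history queryable, so the graph can answer both what is true now and what was true earlier.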

How do Memories work?

The memory system in Zep operates based on a hierarchical design, combining episodic and semantic memory. Here’s how it works:

- Data Ingestion:

  - Raw data (called "Episodes") is ingested, which can include messages, transcripts, or files.

  - Temporal information is added to these episodes for accurate time representation.

- Entity and Fact Extraction:

  - Entities are extracted from the episodic data to create semantic nodes.

  - Relationships (facts) between these entities are identified and represented as edges.

- Hierarchical Graph Construction:

  - The system organizes nodes into three subgraphs:

    - Episodic Subgraph: Stores raw input data.

    - Semantic Subgraph: Holds the entities and relationships extracted and resolved from episodic data.

    - Community Subgraph: Groups strongly connected entities into communities, providing a broader context.

- Temporal Awareness:

  - Temporal data is tracked (e.g., creation and invalidation of relationships) to maintain a dynamic and accurate knowledge graph.

- Memory Retrieval:

  - When queried, the system uses search methods such as cosine similarity and full-text search to retrieve candidate nodes and edges.

  - Reranking techniques then refine the results for precision.

This structure enables Zep to provide nuanced, contextually rich memories that closely resemble human memory systems.
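The retrieval step can be sketched in a few lines. This is an illustrative toy, not Zep's search pipeline: the bag-of-words `embed` function stands in for a real embedding model, and the equal 0.5 weights, the `retrieve` function, and its `top_k` parameter are assumptions chosen for the example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def full_text_score(query: str, fact: str) -> float:
    """Fraction of query terms that appear in the fact."""
    terms = set(query.lower().split())
    words = set(fact.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def retrieve(query: str, facts: list, top_k: int = 2) -> list:
    """Score each fact with both methods, then rerank by the combined score."""
    query_vector = embed(query)
    scored = []
    for fact in facts:
        semantic = cosine_similarity(query_vector, embed(fact))
        lexical = full_text_score(query, fact)
        scored.append((0.5 * semantic + 0.5 * lexical, fact))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [fact for score, fact in scored[:top_k] if score > 0]


facts = [
    "user prefers a formal tone in emails",
    "user closes emails with best regards",
    "team visited the client office last march",
]
print(retrieve("formal tone in emails", facts))
# ['user prefers a formal tone in emails', 'user closes emails with best regards']
```

Combining a similarity score with a keyword score lets an exact term match and a looser semantic match both contribute before the final ordering is decided.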

Methodology

Memories are a foundational element in enhancing Athena's effectiveness and adaptability. This case study explores how memory systems could enable agents to deliver more personalized, context-aware interactions while improving their ability to assist users in dynamic environments.

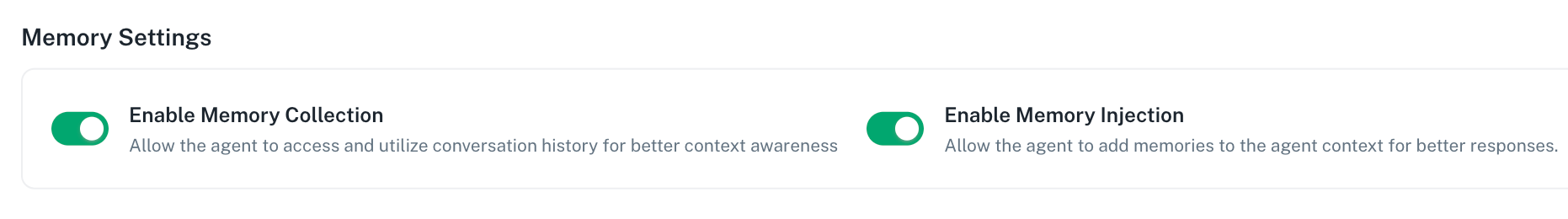

Note: Athena agents are designed with user privacy as a priority, offering the flexibility to easily toggle on or off the storing and fetching of memories. This feature ensures that users maintain full control over how their data is handled. When memory storage is turned off, no conversation data is retained, and when memory fetching is disabled, past information is not referenced in responses.

The case study began by training an agent using Athena to assist me with my job. Going forward, the agent will be referred to as ‘Tia’.

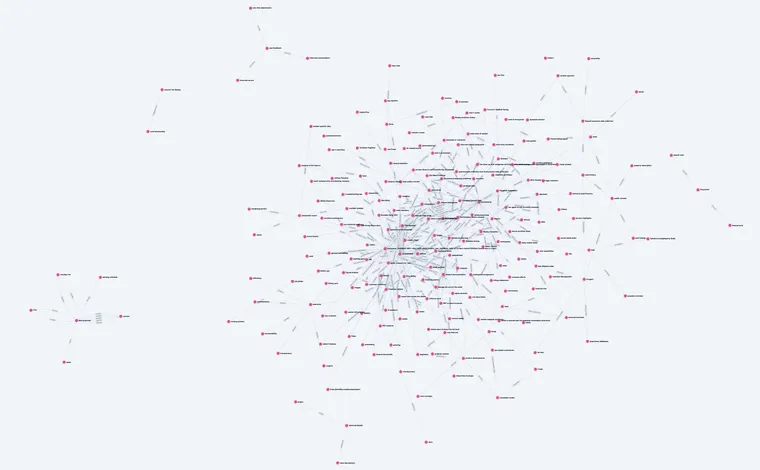

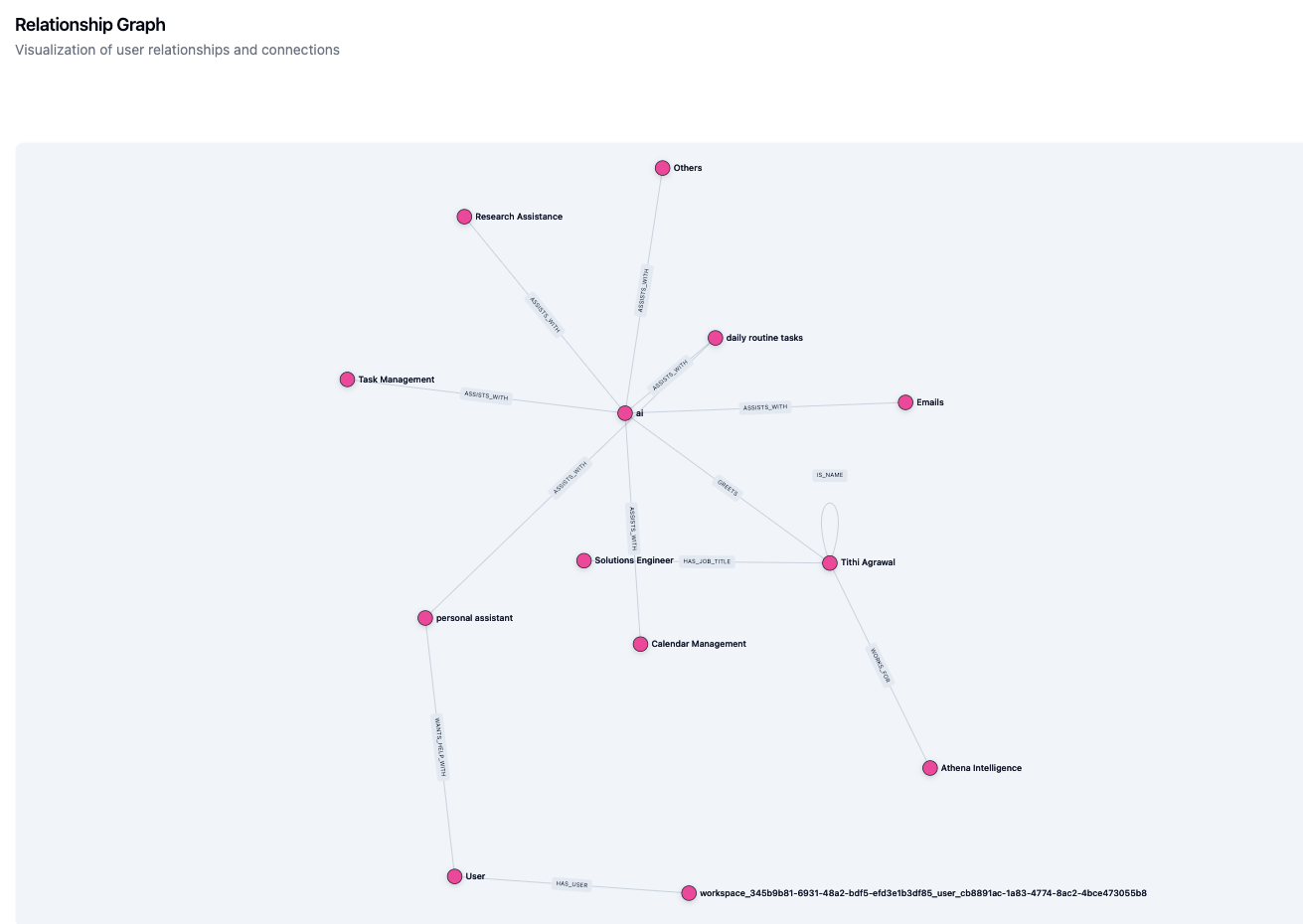

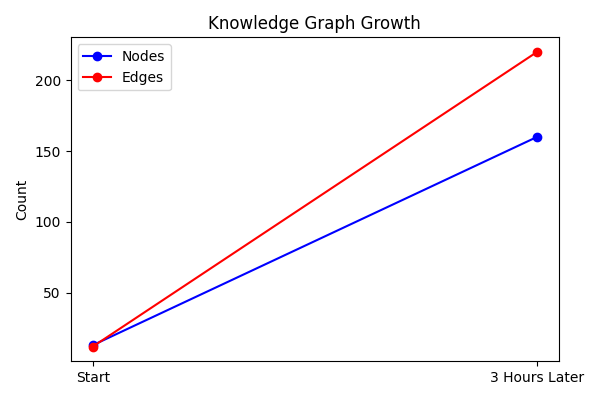

The training began with an introduction to my roles and responsibilities at Athena Intelligence. This was followed by educating Tia about Athena Intelligence, the team members, and their work. All conversations with Tia (known as sessions) were recorded in Zep, which generated a knowledge graph that serves as Tia’s brain. Below is a visual representation of the knowledge graph after the basic training.

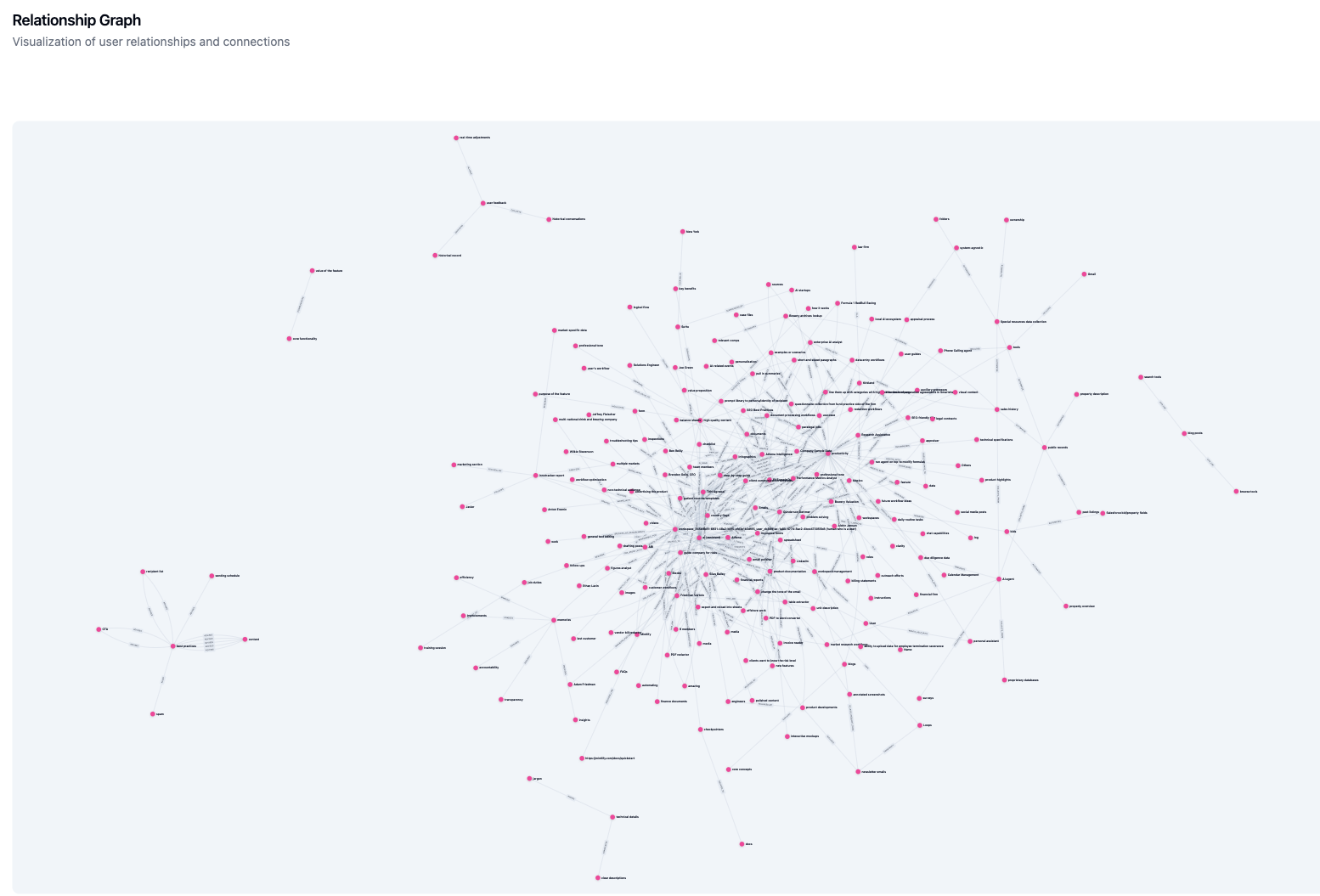

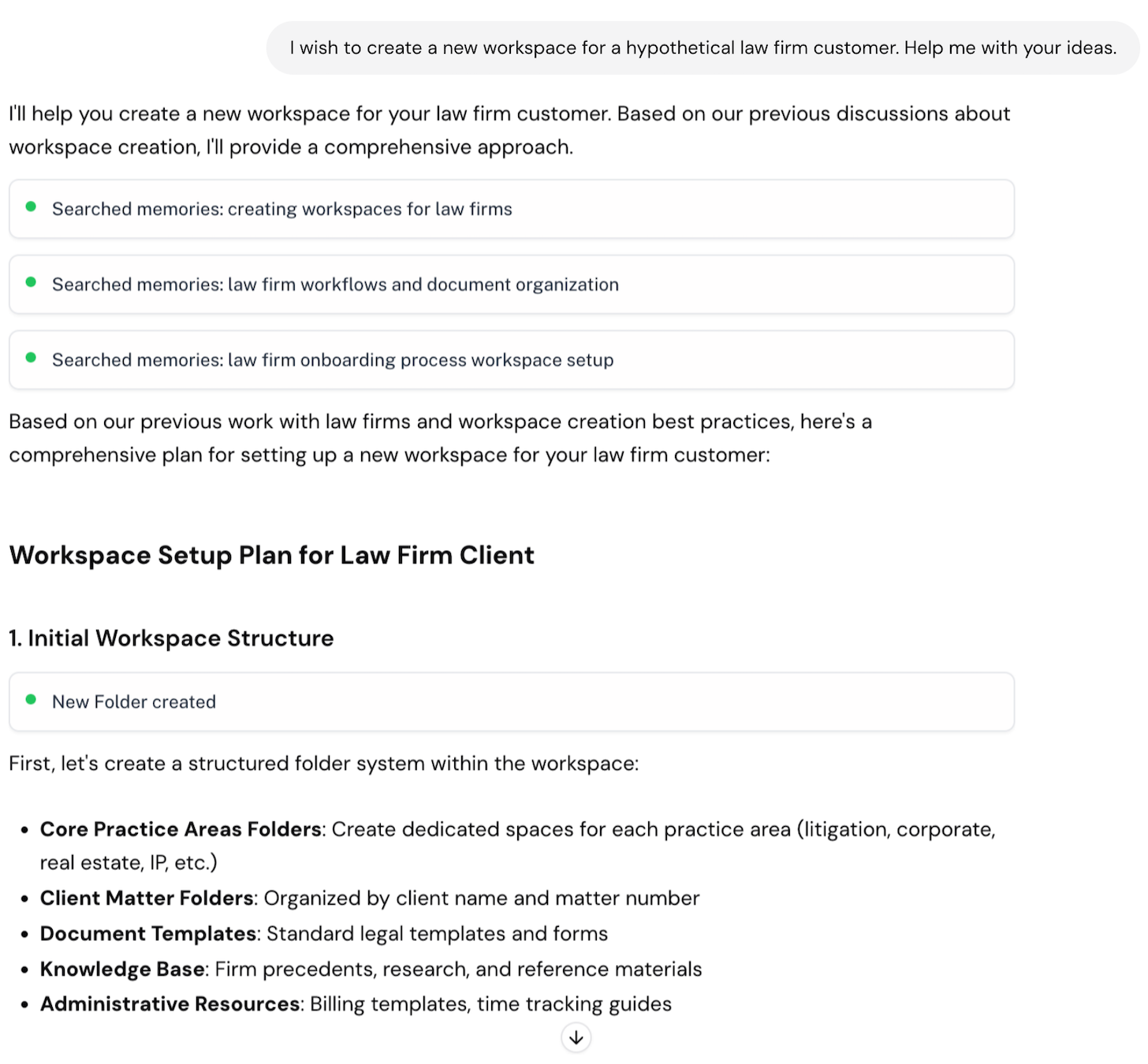

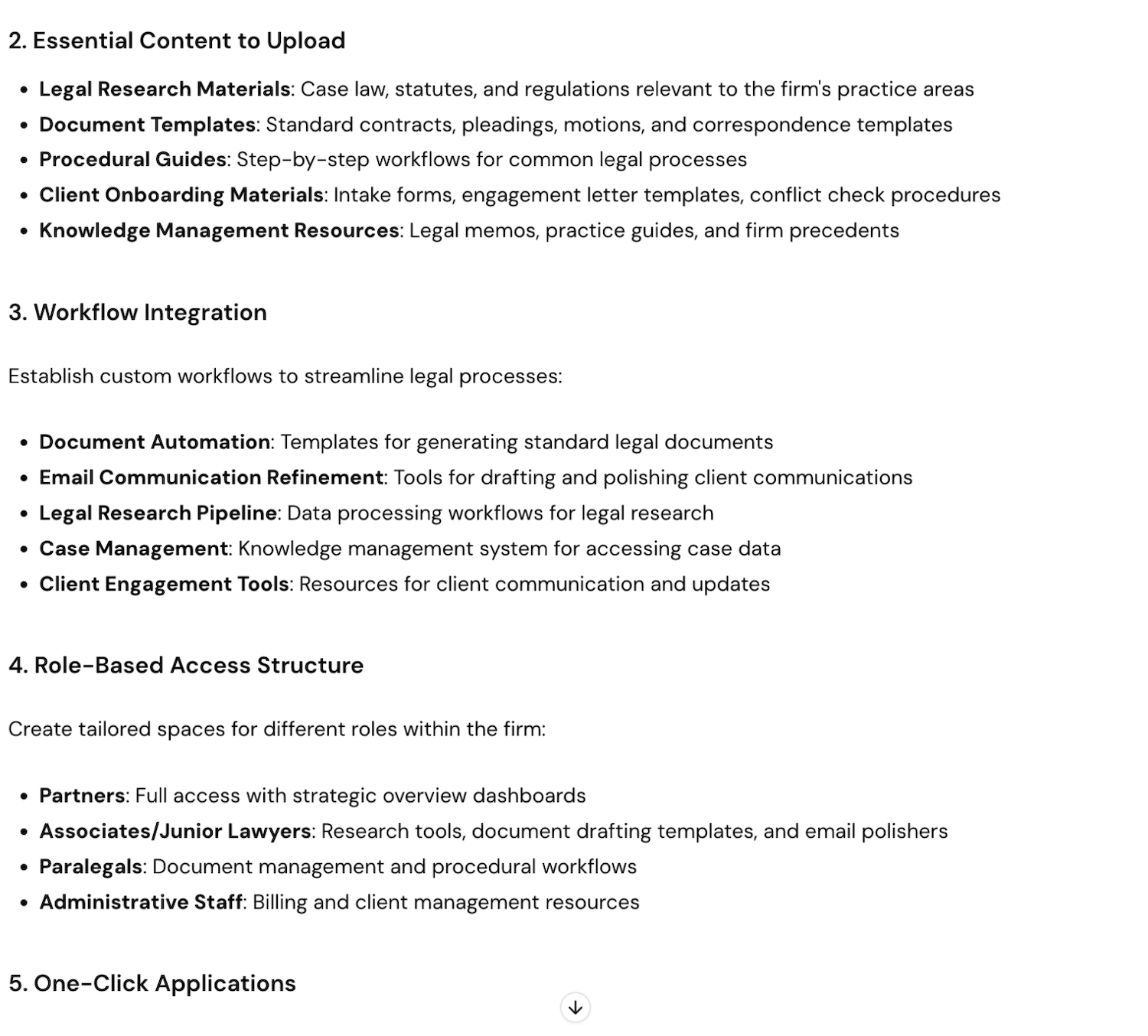

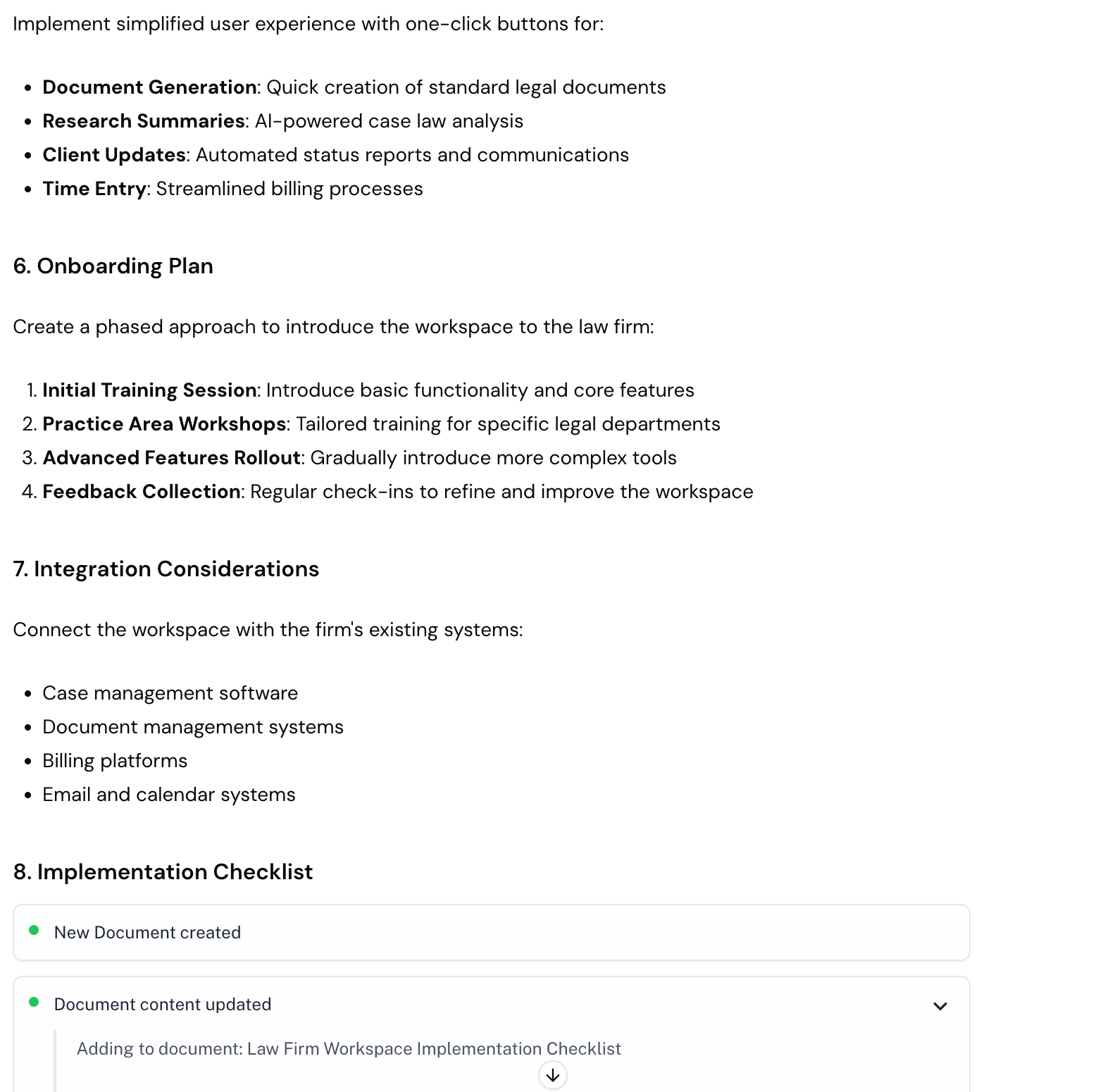

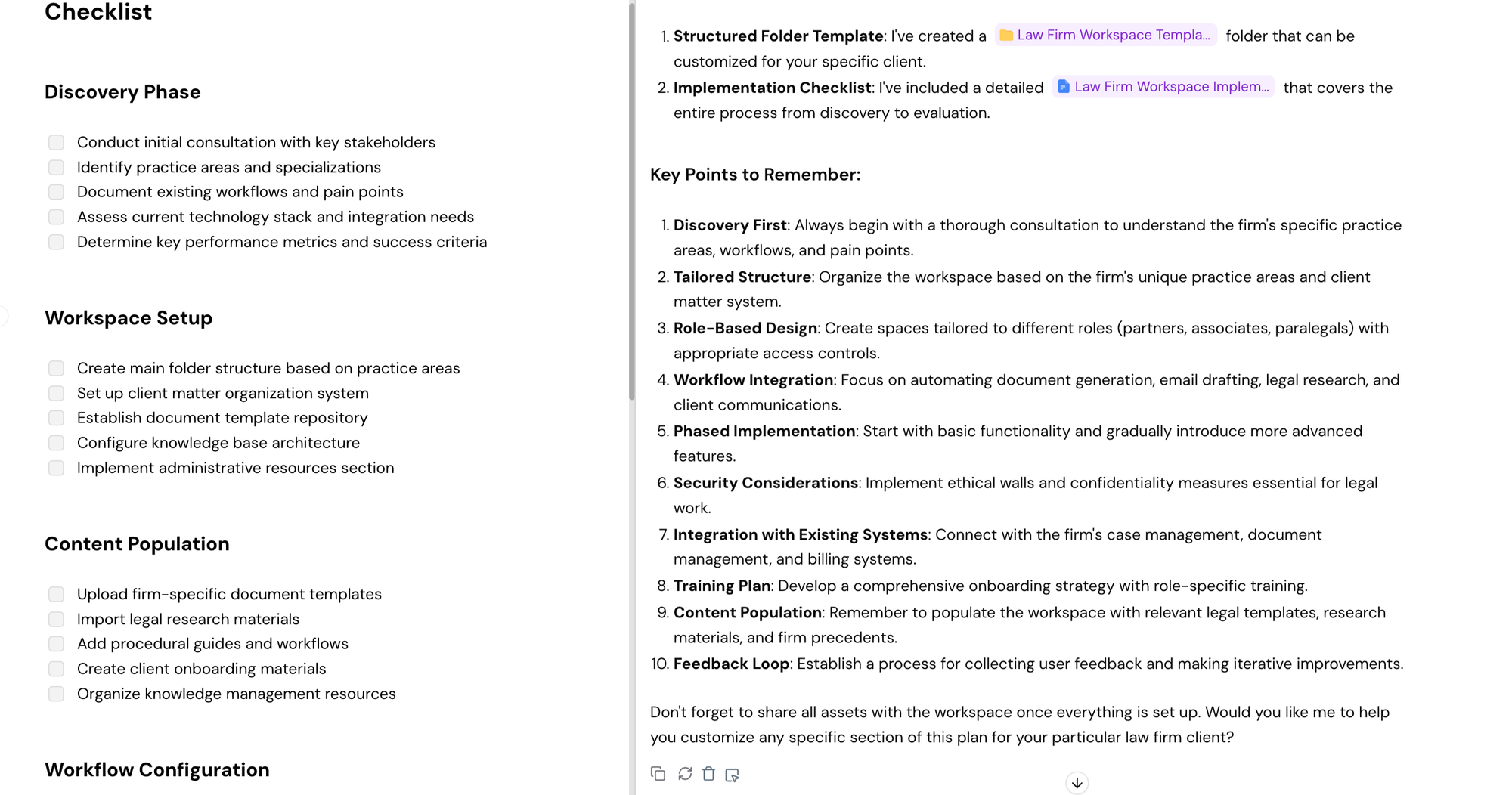

The second cycle of training focused on educating Tia about my work processes, including how workspaces are created for new clients, which involves uploading standard folders and assets relevant to the client into their workspace. The knowledge graph was updated with all this information, as shown below.

The training continued with a focus on the work conducted at Athena Intelligence overall. This included details about documentation and marketing content across various social media platforms, such as LinkedIn, Twitter, blogs, and newsletter emails. Following this, Tia was educated about the customers served by Athena Intelligence and the workflows automated for them. The final knowledge graph is shown below.

Testing Tia’s Knowledge

After Tia's training, her knowledge was tested to assess how effectively she could assist with work at Athena Intelligence. The testing began with a request for Tia to assist in creating a new workspace. Tia utilized her memories from past conversations to provide responses to queries.

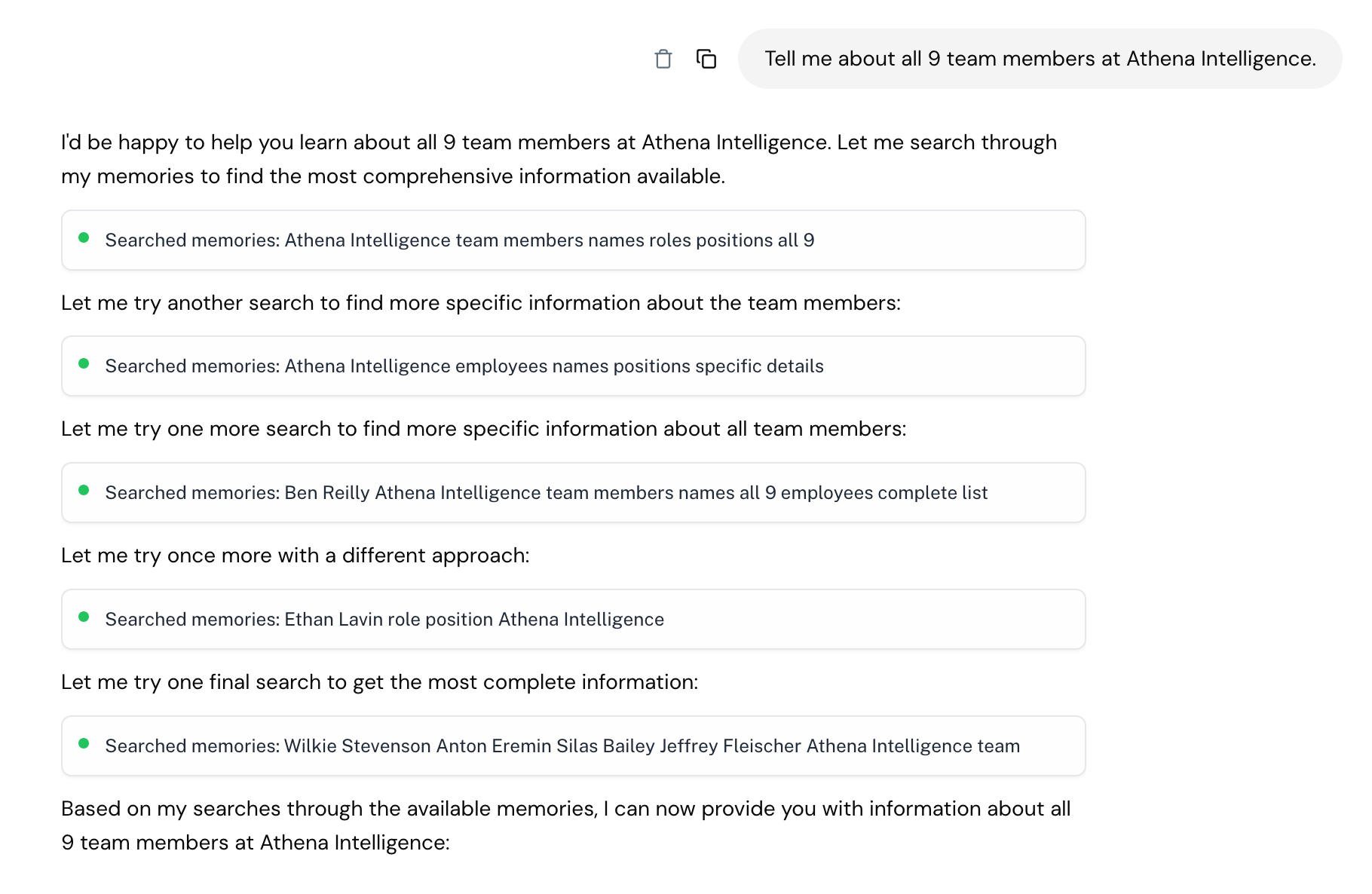

The focus shifted to specific details about Athena’s team members. This included information about their roles and responsibilities, ensuring Tia had a comprehensive understanding of the team dynamics.

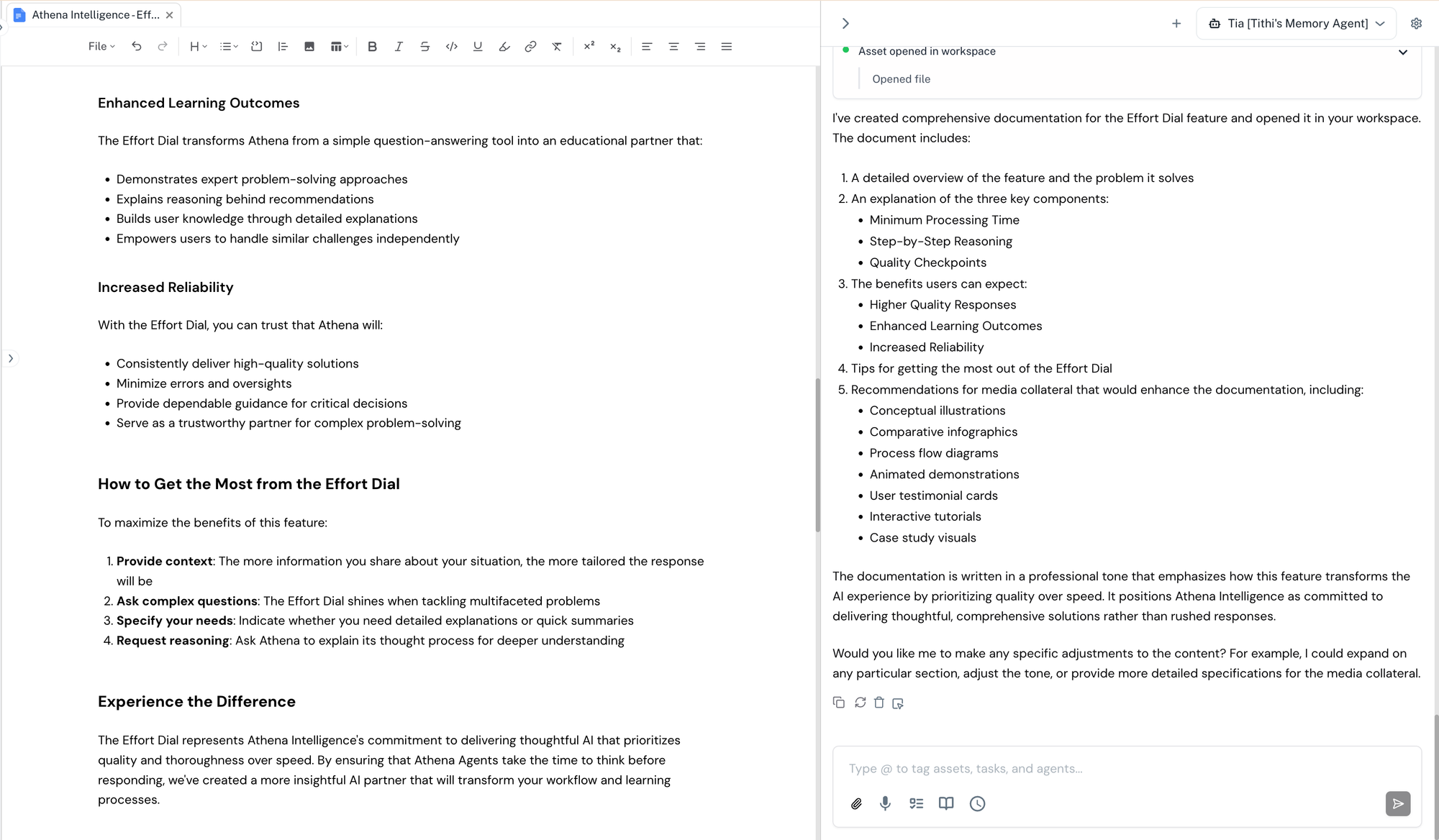

Tia was tasked with drafting official documentation content for Athena Intelligence. Her responses were evaluated to ensure accuracy, clarity, and alignment with the organization's standards and tone.

Testing Injection and Collection

This training section focused on the processes of memory collection and injection, which enabled Athena agents to retain context and deliver personalized, context-aware interactions. Let’s understand these concepts:

Memory Collection: The process by which Tia captured information from conversations and added it to her knowledge graph, so that context could be maintained across interactions.

Memory Injection: Tia’s capability to incorporate specific information from past interactions into her context, enhancing her ability to provide personalized and contextually appropriate responses.

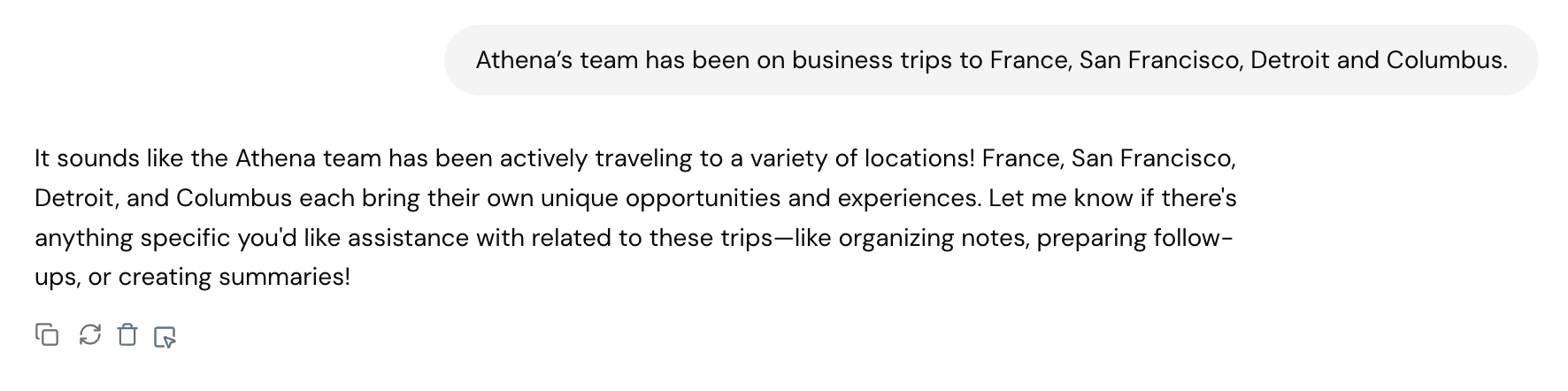

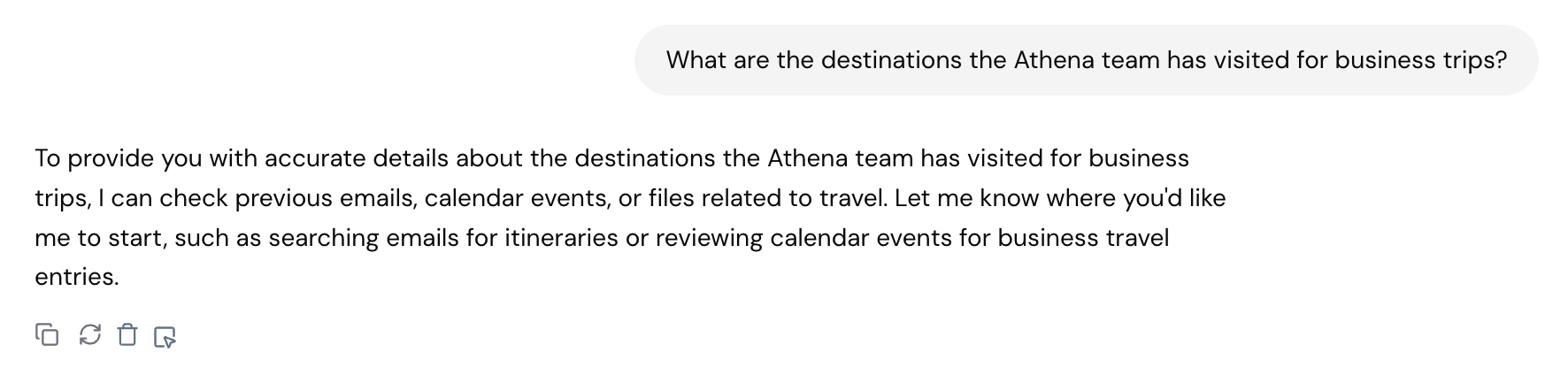

- Test Case 1: Injection and Collection - All the above tests were conducted with this setting, where Tia collected information, added it to her knowledge graph, and injected it into context for future conversations.

- Test Case 2: Injection but No Collection - The memory injection process automatically triggered a collection response in an LLM-friendly format, preventing this case from being tested with the current setup.

- Test Case 3: Collection but No Injection - Tia collected information about business trips made by the team but did not use it in future conversations, as the `search_memories` function was turned off.

- Test Case 4: No Collection and No Injection - Tia did not collect information about Athena’s partnership with DataStax. Instead of updating the knowledge graph with this information, she relied solely on web search when queried about any such partnership.
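The four settings can be modeled as two independent switches. The sketch below is a simplification under stated assumptions: `MemoryAgent`, `collect`, `inject`, and `handle` are hypothetical names, not part of Athena or Zep, and the toggles are treated as fully independent even though Test Case 2 could not be exercised that way in the actual setup.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryAgent:
    collect: bool = True  # store new conversation data
    inject: bool = True   # place stored memories into context
    store: list = field(default_factory=list)

    def handle(self, message: str) -> list:
        """Return the memories placed in context for this message."""
        context = list(self.store) if self.inject else []
        if self.collect:
            self.store.append(message)
        return context


# Test Case 1: injection and collection.
both = MemoryAgent(collect=True, inject=True)
both.handle("The team took a business trip.")
print(both.handle("Where did the team go?"))  # ['The team took a business trip.']

# Test Case 3: collection but no injection.
collect_only = MemoryAgent(collect=True, inject=False)
collect_only.handle("The team took a business trip.")
print(collect_only.handle("Where did the team go?"))  # []
print(len(collect_only.store))  # 2

# Test Case 4: no collection and no injection.
neither = MemoryAgent(collect=False, inject=False)
neither.handle("Athena has a new partnership.")
print(neither.store)  # []
```

In the collection-only case the store still grows, but nothing from it reaches the context, which matches the behavior described for Test Case 3.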

Observations

Performance Metrics

- Efficient Memory Utilization - Tia effectively accesses and utilizes information from previous conversations. By drawing on her accumulated knowledge, she responds to queries using memories with a 97% accuracy rate, providing contextually relevant answers.

- Discrepancy Resolution Accuracy - Tia demonstrates a high level of accuracy in resolving discrepancies. When provided with a file containing inconsistencies, Tia successfully corrects 91% of the discrepancies by leveraging her stored memories. This highlights the system's ability to use past information to address errors and ensure data consistency.

- Knowledge Graph Growth - The knowledge graph expanded from 13 nodes and 12 edges to approximately 160 nodes and 220 edges within a span of 3 hours, showcasing Tia's enhanced capacity to accept and organize vast amounts of information for retrieving accurate and contextually relevant responses.

Several key capabilities and outcomes emerged from the testing and implementation of Tia’s memory system:

- Effective Memory Retrieval - Tia successfully retains and recalls information from past conversations, eliminating the need to start each interaction from scratch. By actively leveraging accumulated knowledge, she delivers context-aware responses.

- Sophisticated Knowledge Structure - The temporal knowledge graph architecture organizes information through interconnected nodes and edges. This structure enables:

  - Dynamic relationship mapping between data points

  - Temporal tracking of when information was acquired

  - Invalidation of timed data, expired data, or memories that change over time

  - Efficient retrieval of relevant context

- Natural Information Flow - Tia’s ability to mimic human-like memory recall has significantly improved. She can:

  - Access historical conversations without explicit prompting

  - Connect related pieces of information across various interactions

  - Deliver increasingly personalized responses based on accumulated knowledge

- Continuous Learning - Tia demonstrates the capability to:

  - Build and expand comprehensive knowledge graphs over time

  - Maintain contextual continuity across multiple sessions

  - Leverage past experiences to enhance future interactions

Opportunities

While the implementation of the memory system has significantly enhanced Tia's capabilities, the following opportunities for improvement have been identified:

- Knowledge Graph Optimization

  - Current Challenge: As the knowledge graph grows, it becomes increasingly dense with information, leading to potential "noise" in retrieval processes

  - The abundance of nodes and connections can impact the precision of memory retrieval

  - Opportunity: Implement intelligent pruning mechanisms to:

    - Archive less frequently accessed information

    - Maintain only the most relevant connections

    - Establish hierarchy in information storage

- Memory Relevance Enhancement

  - Develop more sophisticated algorithms for:

    - Prioritizing recent vs. historical memories

    - Weighing the importance of different types of information

    - Filtering out redundant or outdated information

  - Implement contextual relevance scoring to improve retrieval accuracy
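One possible shape for such relevance scoring is sketched below. It is a proposal-level illustration, not an existing Athena or Zep feature: the `Memory` fields, the 30-day half-life, and the weighting formula are all assumptions picked to show how recency and importance could be combined with a similarity score.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    age_days: float    # time since the memory was recorded
    importance: float  # 0.0 to 1.0, hypothetical weight per information type
    similarity: float  # 0.0 to 1.0, hypothetical match against the current query


def relevance(memory: Memory, half_life_days: float = 30.0) -> float:
    """Scale similarity by importance and by an exponential recency decay."""
    recency = 0.5 ** (memory.age_days / half_life_days)
    return memory.similarity * (0.5 + 0.5 * memory.importance) * (0.5 + 0.5 * recency)


def rank(memories: list, top_k: int = 3) -> list:
    """Return the top_k memories, most relevant first."""
    return sorted(memories, key=relevance, reverse=True)[:top_k]


memories = [
    Memory("mentioned the weather", age_days=0, importance=0.0, similarity=0.8),
    Memory("used a casual sign-off", age_days=30, importance=1.0, similarity=0.8),
    Memory("closes emails with 'Best regards'", age_days=0, importance=1.0, similarity=0.8),
]

for memory in rank(memories):
    print(round(relevance(memory), 2), memory.text)
# 0.8 closes emails with 'Best regards'
# 0.6 used a casual sign-off
# 0.4 mentioned the weather
```

With equal similarity, the recent and important memory ranks first, the older one is discounted by the decay, and the low-importance one falls to the bottom. Memories that score below a chosen threshold would be natural candidates for archiving.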

Conclusion

The implementation of a memory system represents a major advancement in Tia's capabilities. With memories, Athena agents can now maintain meaningful relationships with users beyond single conversations.

Key achievements include:

- Successful retention and recall of information across multiple interactions

- Dynamic mapping of relationships between different pieces of information

- Better personalization through accumulated user knowledge

- Reduced need for users to repeat information they've already shared

Looking ahead, continued work on memory optimization and planned improvements will make the system even more effective. The foundation built positions Athena well to address future challenges and advance AI-human interactions. This successful implementation validates the approach and sets the stage for future innovations in agent memory systems.